20.11.2023

Scientists are turning to artificial intelligence (AI) to view the Sun's poles, or at least to produce an educated guess of what the poles might look like, since they have never been observed before.

"The best way to see the solar poles is obviously to send more satellites, but that is very expensive," said Benoit Tremblay, a researcher at the U.S. National Science Foundation (NSF) National Center for Atmospheric Research (NCAR). "By taking the information we do have, we can use AI to create a virtual observatory and give us a pretty good idea of what the poles look like for a fraction of the cost."

The new technique will also help researchers model a 3D Sun. This will provide a more complete image of our closest star and how its radiation impacts sensitive technologies on Earth like satellites, the power grid, and radio communications.

Currently, observations of the Sun are limited to what is seen by satellites, which are mainly constrained to viewing the star from around its equatorial plane. The proposed AI observations provide a missing link, enabling scientists to improve our understanding of the Sun's dynamics and connect that knowledge to what we know about other stars.

Tremblay began working on this challenge through the Frontier Development Lab, a public-private partnership that accelerates AI research. The program is essentially an eight-week research sprint that brings experts from academia and industry together to tackle interesting science questions. He was assigned to a team tasked with exploring whether AI could be used to generate new perspectives of the Sun from available satellite observations.

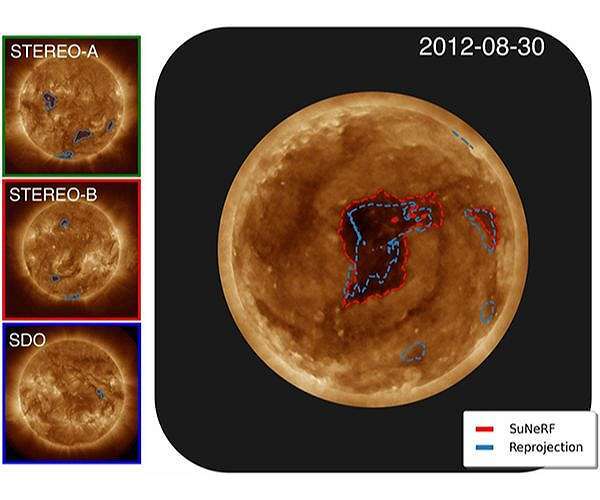

To do this, Tremblay and his colleagues turned to Neural Radiance Fields (NeRFs), which are neural networks that take 2D images and turn them into complex 3D scenes. Because NeRFs have never been used on extreme ultraviolet (EUV) images of plasma, a type of observation that is useful for studying the solar atmosphere and catching solar flares and eruptions, the researchers had to adapt the neural networks to match the physical reality of the Sun. They named the result Sun Neural Radiance Fields, or SuNeRFs.

The group trained SuNeRFs on a time series of images captured by three EUV-observing satellites viewing the Sun from different angles. Once the neural network was able to accurately reconstruct the Sun's past behavior for areas with satellite coverage, the researchers had a 3D model of the star that could be used to approximate what the solar poles looked like during that time period.
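The idea of rendering an unseen viewpoint from a 3D model can be illustrated with a minimal sketch. This is not the team's code: `toy_sun` is a hypothetical, hand-written stand-in for a trained network, and `render_pixel` assumes a simple emission-and-absorption model in which each pixel is the sum of light emitted along a line of sight, dimmed by whatever lies between the emitter and the observer. The radii, scale height, and sample counts are arbitrary illustrative values.

```python
import numpy as np

def toy_sun(points):
    """Hypothetical stand-in for a trained network.

    Returns (emission, absorption) per unit length at each 3D point.
    The "Sun" is a unit sphere: opaque inside, with a thin glowing
    atmosphere that fades with height above the surface.
    """
    r = np.linalg.norm(points, axis=-1)
    emission = np.where(r >= 1.0, np.exp(-(r - 1.0) / 0.1), 0.0)
    absorption = np.where(r >= 1.0, 0.0, 1.0e3)
    return emission, absorption

def render_pixel(origin, direction, field, far=6.0, n_samples=512):
    """Approximate one pixel by sampling the field along a single ray."""
    direction = direction / np.linalg.norm(direction)
    t = np.linspace(0.0, far, n_samples)
    dt = t[1] - t[0]
    points = origin + t[:, None] * direction
    emission, absorption = field(points)
    # Optical depth accumulated *before* each sample (exclusive cumulative sum).
    optical_depth = np.cumsum(absorption * dt) - absorption * dt
    transmittance = np.exp(-optical_depth)
    return float(np.sum(transmittance * emission * dt))

# A viewpoint in the equatorial plane, similar to where satellites sit ...
equator_view = render_pixel(np.array([3.0, 0.0, 0.0]),
                            np.array([-1.0, 0.0, 0.0]), toy_sun)
# ... and a viewpoint above the pole that no satellite has occupied.
pole_view = render_pixel(np.array([0.0, 0.0, 3.0]),
                         np.array([0.0, 0.0, -1.0]), toy_sun)
```

Because the toy field is perfectly symmetric, both viewpoints return the same brightness. In a real reconstruction the field would be learned from the satellite images, so the polar view would be an inference constrained only by what the equatorial viewpoints reveal.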

Tremblay and his international team co-authored a paper that details their process and the importance of their work. While the model produced by AI is only an approximation, the novel perspectives still provide a tool that can be used in studying the Sun and informing future solar missions.

Currently, there are no dedicated missions to study the Sun's poles. Solar Orbiter, a European Space Agency mission that will take close-up pictures of the Sun, will fly near the poles and help validate SuNeRFs as well as refine reconstructions of the poles. In the meantime, Tremblay and his fellow researchers are planning to use NSF NCAR's supercomputer, Derecho, to increase the resolution of their model, explore new AI methods that can improve the accuracy of their inferences, and develop a similar model for Earth's atmosphere.

"Using AI in this way allows us to leverage the information we have, but then break away from it and change the way we approach research," said Tremblay. "AI changes fast and I'm excited to see how advancements improve our models and what else we can do with AI."

Source: SD