7.01.2018

Almost 50 years after the first humans launched to the moon, researchers in Dallas have taken a new look at NASA's Apollo missions.

Or more appropriately, a new listen.

Scientists at the University of Texas at Dallas have worked to make thousands of hours of previously archived Apollo program audio accessible to the public, transferring it from near-obsolete analog tapes and transcribing it.

NASA recorded all the in-flight and ground communications between the astronauts, mission controllers and backroom support specialists during the lunar flights of the late 1960s and early 1970s. While many of the Apollo crew members' calls and some of mission control's replies are well known today, most of the conversations that made the missions not just possible but successful have gone almost entirely unheard by the public.

"When one thinks of Apollo, we gravitate to the enormous contributions of the astronauts," John Hansen, director and founder of the Center for Robust Speech Systems (CRSS) in the Erik Jonsson School of Engineering and Computer Science, said in a statement released by the University of Texas. "However, the heroes behind the heroes represent the engineers, scientists and specialists who brought their experience together collectively to ensure the success of the Apollo program."

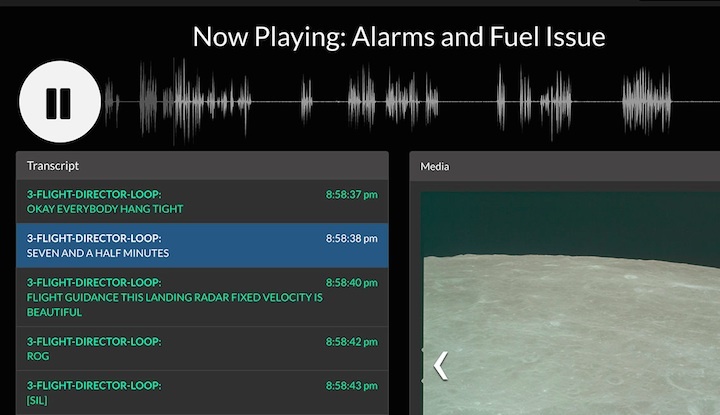

The Explore Apollo website will provide the public with full access to NASA's Apollo audio archives.


In 2012, the researchers at the CRSS received a National Science Foundation grant to devise the speech-processing techniques needed to reconstruct and transform the NASA audio archive. The result, created in collaboration with the University of Maryland, is the "Explore Apollo" website that will provide the public with access to the recordings.

The project, five years in the making, was more complex than it might sound.

The original recordings were captured on more than 200 analog tapes, each 14 hours long, with 30 tracks of audio and up to 35 people speaking. The Explore Apollo team, including Hansen and a group of doctoral students, had to develop algorithms to process, recognize and analyze the audio to determine who said what, and when.

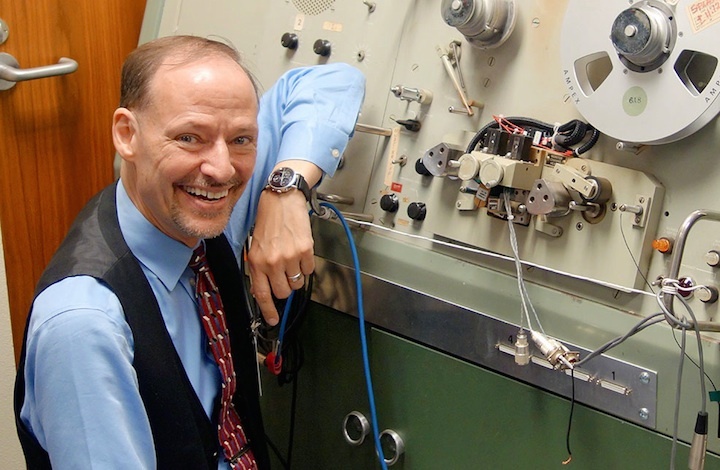

First, though, they needed a way to digitize the tapes. The only existing means of playing the reels was a SoundScriber, a piece of audio equipment that predates the Apollo missions themselves.

"NASA pointed us to the SoundScriber," said Hansen, "and said do what you need to do."

The problem was that it could read only one track at a time. The user had to turn a handle by hand to move the read head from one track to the next. At that rate, it would take at least 170 years to digitize just the Apollo 11 mission audio using the SoundScriber, said Hansen.

"We couldn't use that system, so we had to design a new one," Hansen stated. "We designed our own 30-track read head, and built a parallel solution to capture all 30 tracks at one time."

"This is the only solution that exists on the planet," he said.

Once the tapes could be read, the next challenge was understanding the audio so it could be transcribed.

The Explore Apollo team had to create software that could detect speech activity, including tracking each person who was speaking, what they said and when. They also wanted to track speaker characteristics to help other researchers analyze how people react in tense situations.
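The article does not describe how the CRSS software detects speech. As a rough illustration of the very first step, finding the stretches of a track where anyone is talking at all, here is a minimal Python sketch of a simple energy-threshold voice activity detector. It is a stand-in built on assumptions, not the team's method: the function name `detect_speech_segments`, the 20 ms frame size, the -35 dBFS threshold and the five-frame pause rule are all hypothetical choices, and the input is assumed to be one track already decoded to floating-point samples between -1 and 1. Working out *who* is speaking (speaker diarization) is a separate, harder step that this sketch does not attempt.

```python
import math
from typing import List, Optional, Tuple


def detect_speech_segments(
    samples: List[float],
    sample_rate: int,
    frame_ms: int = 20,
    threshold_db: float = -35.0,
    min_gap_frames: int = 5,
) -> List[Tuple[float, float]]:
    """Return (start_s, end_s) spans of likely speech in one audio track.

    Hypothetical energy-threshold detector: samples are floats in [-1, 1],
    and a frame counts as speech when its mean power exceeds threshold_db (dBFS).
    """
    frame_len = max(1, sample_rate * frame_ms // 1000)

    # 1. Label each fixed-length frame as speech (True) or non-speech (False).
    active: List[bool] = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        power = sum(x * x for x in frame) / len(frame)
        level_db = 10 * math.log10(power) if power > 0 else float("-inf")
        active.append(level_db > threshold_db)

    # 2. Join speech frames into segments, bridging pauses shorter than
    #    min_gap_frames so brief gaps between words do not split an utterance.
    segments: List[Tuple[float, float]] = []
    seg_start: Optional[int] = None
    silent_run = 0
    for i, is_speech in enumerate(active):
        if is_speech:
            if seg_start is None:
                seg_start = i
            silent_run = 0
        elif seg_start is not None:
            silent_run += 1
            if silent_run >= min_gap_frames:
                end_frame = i - silent_run + 1  # one past the last speech frame
                segments.append(
                    (seg_start * frame_len / sample_rate, end_frame * frame_len / sample_rate)
                )
                seg_start, silent_run = None, 0

    # 3. Close a segment that is still open when the track ends.
    if seg_start is not None:
        end_sample = min((len(active) - silent_run) * frame_len, len(samples))
        segments.append((seg_start * frame_len / sample_rate, end_sample / sample_rate))
    return segments


if __name__ == "__main__":
    rate = 1000  # a 1 kHz toy rate keeps the example small
    track = [0.0] * 200 + [0.5] * 400 + [0.0] * 200
    print(detect_speech_segments(track, rate))  # [(0.2, 0.6)]
```

A fixed threshold is the simplest possible choice; tape audio with hiss and crosstalk between tracks would likely need a noise-adaptive threshold or a trained speech/non-speech classifier instead.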

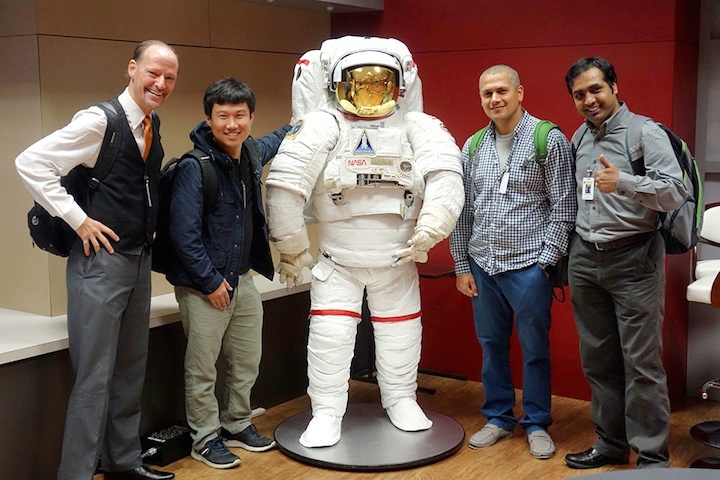

From left, John H.L. Hansen, Chengzhu Yu, Abhijeet Sangwan and Lakshmish Kaushik, seen here at Johnson Space Center, oversaw the Explore Apollo project. (UT Dallas)


The tapes also mixed audio from many channels, which had to be placed in chronological order, and they were full of vocabulary specific to the missions.

Commercial speech recognition software was ill-equipped to recognize the many NASA acronyms and spacecraft terms.

"We drew from thousands of books and manuscripts from NASA," Hansen told KERA Public Radio. "We pulled close to 4.5 billion words from NASA archives of text in order to build a language model that would characterize the types and words that they would use for the Apollo missions."

"Without that, the speech recognition would never be able to work on this data," he said.
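Hansen does not say what kind of language model the team built. One classic way a language model helps a recognizer is by scoring candidate transcripts, so that word sequences common in the training text beat acoustically similar but implausible ones. The sketch below is a toy add-one-smoothed bigram model in Python; the class name `BigramModel`, the whitespace tokenization and the three training sentences are hypothetical, and a model trained on billions of words would use higher-order or neural models with far more efficient storage.

```python
import math
from collections import Counter
from typing import Iterable, List


def tokenize(sentence: str) -> List[str]:
    """Lower-case whitespace tokens wrapped in sentence-boundary markers."""
    return ["<s>"] + sentence.lower().split() + ["</s>"]


class BigramModel:
    """Toy add-one (Laplace) smoothed bigram language model."""

    def __init__(self, sentences: Iterable[str]) -> None:
        self.context_counts: Counter = Counter()
        self.bigram_counts: Counter = Counter()
        for sentence in sentences:
            tokens = tokenize(sentence)
            self.context_counts.update(tokens[:-1])  # how often each word precedes another
            self.bigram_counts.update(zip(tokens, tokens[1:]))
        # Every word seen after some context, plus one slot for unseen words.
        self.vocab_size = len({word for _, word in self.bigram_counts}) + 1

    def log_prob(self, sentence: str) -> float:
        """Natural-log probability of the sentence under the smoothed model."""
        tokens = tokenize(sentence)
        total = 0.0
        for prev, word in zip(tokens, tokens[1:]):
            count = self.bigram_counts[(prev, word)]
            total += math.log((count + 1) / (self.context_counts[prev] + self.vocab_size))
        return total


if __name__ == "__main__":
    # Made-up training sentences standing in for a large NASA text archive.
    corpus = [
        "the lm is go for undocking",
        "the csm is go for separation",
        "the lm descent engine is armed",
    ]
    model = BigramModel(corpus)
    # An in-domain word sequence should outscore an acoustically similar one.
    print(model.log_prob("the lm is go") > model.log_prob("the elm is go"))  # True
```

Because "lm" follows "the" in the training text and "elm" never appears, the model prefers the spaceflight reading, which is the kind of domain knowledge a general-purpose commercial model would lack.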

The Explore Apollo website currently presents a sample of the processed audio from Apollo 11, the first moon landing. When complete, the project will encompass the audio from all of Apollo 11, as well as most of the Apollo 13, Apollo 1 and Gemini 8 missions.

Source: CS